The Bernoulli lemniscate is one of the beautiful curves in mathematics. The curve, which in some sense kick-started the study of elliptic functions, has a lot of interesting properties, and some of those will be the subject of this post.

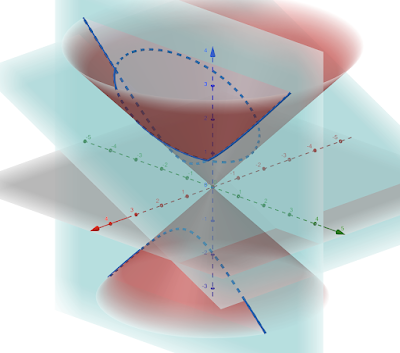

The Bernoulli lemniscate can be defined in at least two ways. It is the locus of a point $P$ such that the product of its distances from two fixed points, say $P_1$ and $P_2$, stays constant (equal to the square of half the distance between them). Equivalently, it is the inverse of the hyperbola $x^2-y^2=1$ w.r.t. the unit circle, which is the definition we will be using in this post.

Figure: $DG \cdot DF = \text{constant}$, where $F \equiv (1/\sqrt{2},0)$ and $G\equiv (-1/\sqrt{2},0)$.
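As a quick numerical sanity check that the two definitions agree, here is a small Python sketch. It inverts a few points of the hyperbola in the unit circle and computes the product of their distances to $F$ and $G$. The $\cosh$/$\sinh$ parametrisation and the helper names are my own choices for illustration.

```python
import math

C = 1 / math.sqrt(2)  # F = (C, 0), G = (-C, 0)

def hyperbola_point(t):
    """Point on the right branch of x^2 - y^2 = 1 (illustrative parametrisation)."""
    return math.cosh(t), math.sinh(t)

def invert(x, y):
    """Inverse of (x, y) w.r.t. the unit circle centred at the origin."""
    d2 = x * x + y * y
    return x / d2, y / d2

def focal_product(x, y):
    """Product of the distances from (x, y) to F and G."""
    return math.hypot(x - C, y) * math.hypot(x + C, y)

products = [focal_product(*invert(*hyperbola_point(t))) for t in (0.0, 0.3, 1.0, 2.5)]
print(products)  # every entry should be close to 1/2
```

The constant comes out as $1/2$, which is the square of half the distance between $F$ and $G$.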

One of the first things that we might want to do is to construct a tangent at a given point on the Lemniscate.

A useful property of the hyperbola that comes in handy here is the fact that the line from the origin drawn perpendicular to a tangent of the hyperbola intersects the hyperbola at a point which is the reflection of the tangent point w.r.t. the $x$-axis. Also, the point of intersection of the tangent and the perpendicular line is the inverse of the reflected point w.r.t. the unit circle.

Figure: $I$ and $D'$ are reflections of each other w.r.t. the $x$-axis; $I$ and $H$ are inverses of each other w.r.t. the unit circle.
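This property is easy to check numerically. The sketch below assumes the standard tangent equation $ax-by=1$ at a point $(a,b)$ of $x^2-y^2=1$; the parameter value `t` is an arbitrary choice of mine.

```python
import math

t = 0.8                                 # arbitrary illustrative parameter
a, b = math.cosh(t), math.sinh(t)       # tangent point D' on x^2 - y^2 = 1

# The tangent at D' is a*x - b*y = 1, so the perpendicular from the
# origin runs along the direction (a, -b).
I = (a, -b)                             # reflection of D' in the x-axis
k = 1 / (a * a + b * b)
H = (k * a, -k * b)                     # foot of the perpendicular from the origin

on_hyperbola = I[0] ** 2 - I[1] ** 2    # 1 if I lies on the hyperbola
on_tangent = a * H[0] - b * H[1]        # 1 if H lies on the tangent line
cross = H[0] * I[1] - H[1] * I[0]       # 0 if the origin, H and I are collinear
radii_product = math.hypot(*H) * math.hypot(*I)  # 1 if H and I are inverses

print(on_hyperbola, on_tangent, cross, radii_product)
```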

The inverse of the tangent line shown above w.r.t. the unit circle is a circle passing through the origin $B$. Because the tangent line and the blue line intersect at $H$, their inverses intersect at $I$, the inverse of $H$ (the blue line passes through the origin and is therefore its own inverse). This shows that the inverted circle also passes through $I$. Since $H$ is the point of the tangent line closest to $B$, its inverse $I$ is the point of the circle farthest from $B$, so $BI$ is a diameter of the circle.

For a given point $D$ on the lemniscate, we can extend the radial line $BD$ so that it intersects the hyperbola at $D'$. As the green line is the tangent to the hyperbola at $D'$, and inversion preserves tangency, we know that the green circle must be tangent to the lemniscate at $D$. As $BD$ is a chord of the circle, the point of intersection of its perpendicular bisector (not shown in the picture below) with the diameter $BI$ gives the center $E$ of the circle.

As the lemniscate and the green circle are tangent at $D$, they share a common tangent at that point. With the circle's center known, it is now trivial to draw both the tangent and normal to the lemniscate at $D$.

In summary, to draw the tangent at point $D$ on the lemniscate, extend the radial line to find $D'$ on the hyperbola. Reflect it w.r.t the $x$-axis to get $I$. We can then locate $E$ as the intersection of $BI$ and the perpendicular bisector of $BD$. Then $ED$ is the normal to the lemniscate at $D$ from which the tangent follows trivially.
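The steps above can be sketched in a few lines of Python. This is only an illustration under my own conventions: $D'$ is picked through a hyperbola parameter `t`, $B$ is the origin, and the function name is made up for this example.

```python
import math

def tangent_construction(t):
    """Run the construction for D' = (cosh t, sinh t) on x^2 - y^2 = 1."""
    D_prime = (math.cosh(t), math.sinh(t))
    d2 = D_prime[0] ** 2 + D_prime[1] ** 2
    D = (D_prime[0] / d2, D_prime[1] / d2)   # inverse of D': a point on the lemniscate
    I = (D_prime[0], -D_prime[1])            # reflect D' in the x-axis
    # E lies on the line BI, so E = s*I; being on the perpendicular
    # bisector of BD means |E| = |E - D|, which fixes s.
    s = (D[0] ** 2 + D[1] ** 2) / (2 * (D[0] * I[0] + D[1] * I[1]))
    E = (s * I[0], s * I[1])
    normal = (D[0] - E[0], D[1] - E[1])      # direction of ED
    tangent = (-normal[1], normal[0])        # normal rotated by 90 degrees
    return D, E, normal, tangent

D, E, normal, tangent = tangent_construction(0.5)
print(D, E, tangent)
```

In this sketch `s` always evaluates to $1/2$, so $E$ is the midpoint of $BI$, as it should be if $BI$ is a diameter.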

Let $(r,\theta)$ be the polar coordinates of $D$ in the following figure. Because $D$ and $D'$ are inverses w.r.t. the unit circle, we have $BD'=1/r$. As $D'$ and $I$ are reflections of each other in the $x$-axis, we have $BI=BD'=1/r$ and $\angle D'BI=2\theta$.

Because $BI$ is the diameter of the circle, we know that $BD \perp DI$. Therefore using this right angled triangle, we can see that $\cos 2\theta=r/(1/r)$, thereby showing the polar equation of the lemniscate is $r^2=\cos 2\theta$.

Also, because $DE$ (which is a normal to the lemniscate at $D$) and $EB$ are radii of the same circle, triangle $BED$ is isosceles and we have $\angle BDE=\angle DBE=2\theta$, showing that the angle between the radial line and the normal is twice the radial angle. This result is called the Vechtmann theorem, which is mostly proved using calculus.

The Vechtmann theorem shows that the normal to the lemniscate makes an angle of $3\theta$ with the $x$-axis, i.e. three times the radial angle. Therefore, the angle between the normals (and hence the tangents) at two given points is three times the difference between the radial angles of those points.
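For readers who like to see numbers, here is a hedged check of the $3\theta$ claim. It relies on the Cartesian form $(x^2+y^2)^2=x^2-y^2$, which is my own rewriting of $r^2=\cos 2\theta$ and is not used elsewhere in this post; the gradient of the left side minus the right side points along the normal.

```python
import math

def normal_angle(theta):
    """Angle of the normal at polar angle theta, assuming |theta| < pi/4.

    Uses the gradient of F(x, y) = (x^2 + y^2)^2 - x^2 + y^2,
    which vanishes on the lemniscate.
    """
    r = math.sqrt(math.cos(2 * theta))
    x, y = r * math.cos(theta), r * math.sin(theta)
    fx = 4 * x * (x * x + y * y) - 2 * x
    fy = 4 * y * (x * x + y * y) + 2 * y
    return math.atan2(fy, fx)

theta_1, theta_2 = 0.2, 0.6
between_normals = normal_angle(theta_2) - normal_angle(theta_1)
print(normal_angle(0.3), 3 * 0.3)
print(between_normals, 3 * (theta_2 - theta_1))
```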

Time to go infinitesimal. Consider two infinitesimally close points $D \equiv (r,\theta)$ and $J$. Let $DM$ be a line perpendicular to the radial line $BJ$.

From the infinitesimal right $\triangle DMN$ in the figure above, we easily see that $DM \approx r\,d\theta$, $DN \approx ds$ and $MN \approx dr$. Also, because the angle between the radial line and the normal at $D$ is $2\theta$, we have $\angle MDN=2\theta$. Therefore,

$\displaystyle \cos 2\theta=\frac{r\,d\theta}{ds} \implies ds=\frac{d\theta}{r}$

using the polar equation of the lemniscate.

The radius of curvature $R$ for a curve is given by the relation $ds=R\cdot d\varphi$ where $\varphi$ is the tangential angle (the angle a tangent makes with a common reference line). Therefore, the change in tangential angle between two points is just the angle between the tangents at those points.

We just saw that for a lemniscate, the tangential angle is thrice the polar angle (up to an additive constant). Therefore, $d\varphi=3\,d\theta$. The above three relations clearly show that the curvature $\kappa$, the inverse of the radius of curvature $R$, is $3r$.
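The curvature claim can be tested directly from $ds=R\cdot d\varphi$ without any closed-form curvature formula. The following is a rough finite-difference sketch; the step sizes `h` and `d` are arbitrary choices of mine.

```python
import math

def point(theta):
    """Cartesian point of r^2 = cos(2*theta), assuming |theta| < pi/4."""
    r = math.sqrt(math.cos(2 * theta))
    return r * math.cos(theta), r * math.sin(theta)

def tangent_angle(theta, h=1e-6):
    """Direction of the tangent, estimated with a central difference."""
    x0, y0 = point(theta - h)
    x1, y1 = point(theta + h)
    return math.atan2(y1 - y0, x1 - x0)

def curvature_estimate(theta, d=1e-4):
    """Estimate d(phi)/ds between two nearby points around theta."""
    dphi = tangent_angle(theta + d) - tangent_angle(theta - d)
    dphi = math.remainder(dphi, 2 * math.pi)   # guard against atan2 wrap-around
    (x0, y0), (x1, y1) = point(theta - d), point(theta + d)
    ds = math.hypot(x1 - x0, y1 - y0)          # chord as a stand-in for arc length
    return dphi / ds

theta = 0.3
r = math.sqrt(math.cos(2 * theta))
print(curvature_estimate(theta), 3 * r)  # the two values should nearly agree
```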

Going back again to the infinitesimal triangle, we have

$\displaystyle \sin 2\theta=\frac{dr}{ds} \implies ds=\frac{dr}{\sqrt{1-r^4}}$

again using the polar equation of the lemniscate. It is this relation that made the lemniscate an extremely important, and the first, curve to be studied in the context of elliptic integrals.
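To see this relation at work, we can compare the length of a piece of the lemniscate, measured directly as a fine polyline, with the integral of $dr/\sqrt{1-r^4}$ between the corresponding radii. The sketch below stays away from $r=1$, where the integrand blows up; the endpoints and step counts are arbitrary choices of mine.

```python
import math

def point(theta):
    """Cartesian point of r^2 = cos(2*theta), assuming |theta| < pi/4."""
    r = math.sqrt(math.cos(2 * theta))
    return r * math.cos(theta), r * math.sin(theta)

def polyline_length(theta_a, theta_b, n=20000):
    """Approximate the arc length by summing n short chords."""
    total = 0.0
    prev = point(theta_a)
    for k in range(1, n + 1):
        cur = point(theta_a + (theta_b - theta_a) * k / n)
        total += math.hypot(cur[0] - prev[0], cur[1] - prev[1])
        prev = cur
    return total

def simpson(f, a, b, n=2000):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    for k in range(1, n):
        s += (4 if k % 2 else 2) * f(a + k * h)
    return s * h / 3

theta_a, theta_b = 0.2, 0.6
r_a = math.sqrt(math.cos(2 * theta_a))   # larger radius
r_b = math.sqrt(math.cos(2 * theta_b))   # smaller radius
from_curve = polyline_length(theta_a, theta_b)
from_integral = simpson(lambda r: 1 / math.sqrt(1 - r ** 4), r_b, r_a)
print(from_curve, from_integral)
```

Note that $r$ decreases as $\theta$ increases on this arc, so the integral is taken from the smaller radius to the larger one to keep the length positive.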

If we think about it, the idea that makes this all work is the fact that polar equation of the lemniscate is of a very specific form. In fact, we can generalize the same to see that curves defined by the polar equation

$r^n=\cos n\theta$

are all amenable to a similar approach, with which we can see that their tangential angle is $(n+1)\theta$ (up to an additive constant), their curvature is $(n+1)r^{n-1}$ and their differential arc length is

$\displaystyle ds=\frac{dr}{\sqrt{1-r^{2n}}}$
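The curvature formula for the whole family can be spot-checked numerically. The sketch below uses the standard polar curvature formula $\kappa=(r^2+2r'^2-rr'')/(r^2+r'^2)^{3/2}$, which is an outside ingredient not derived in this post, with finite-difference derivatives. The sample values of $n$ and $\theta$ are arbitrary.

```python
import math

def radius(theta, n):
    """r(theta) for r^n = cos(n*theta), assuming cos(n*theta) > 0."""
    return math.cos(n * theta) ** (1.0 / n)

def polar_curvature(theta, n, h=1e-4):
    """Signed curvature from the polar formula, using central differences."""
    r0 = radius(theta, n)
    rp = (radius(theta + h, n) - radius(theta - h, n)) / (2 * h)
    rpp = (radius(theta + h, n) - 2 * r0 + radius(theta - h, n)) / (h * h)
    return (r0 * r0 + 2 * rp * rp - r0 * rpp) / (r0 * r0 + rp * rp) ** 1.5

theta = 0.2
results = []
for n in (1, 2, 3, 0.5, -2):
    r = radius(theta, n)
    results.append((polar_curvature(theta, n), (n + 1) * r ** (n - 1)))
    print(n, results[-1])
```

Here $n=1$ is a circle, $n=2$ the lemniscate and $n=-2$ the hyperbola $x^2-y^2=1$, for which the signed curvature comes out negative in this convention.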

These curves are called sinusoidal spirals. They include circles, parabolas and hyperbolas, among others, as special cases, and have many other interesting properties as well, which I leave the readers to explore.

Yours Aye

Me
