I write, therefore I am

Friday, June 20, 2025

Wednesday, March 12, 2025

Sphere on a freely spinning turntable

Tuesday, January 14, 2025

An interesting Probability problem

Tuesday, December 31, 2024

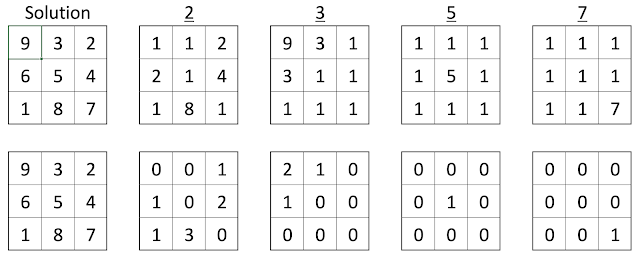

Monkey Dog Problem - Grid Multiplication

|

| A Solution for $5 \times 5$ grid |

|

| A Solution for $6 \times 6$ grid |

|

| A Solution for $7 \times 7$ grid |

|

| A Solution for $8 \times 8$ grid |

from ortools.linear_solver import pywraplp

import numpy as np

def main():

# Data

n = 3

vals = []

# primes = [2, 3, 5, 7, 11, 13, 17, 19, 23]

# primes = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47]

# primes = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47, 53, 59, 61]

primes = [2, 3, 5, 7]

primepi = len(primes)

def vals(i0, k):

i, cnt, p = i0, 0, primes[k]

while i % p == 0:

i //= p

cnt += 1

return cnt

# Solver

# Create the mip solver with the SCIP backend.

solver = pywraplp.Solver.CreateSolver("SCIP")

x = {}

for i in range(n * n):

for j in range(n * n):

x[i, j] = solver.IntVar(0, 1, "")

for i in range(n * n):

solver.Add(solver.Sum([x[i, j] for j in range(n * n)]) == 1)

for j in range(n * n):

solver.Add(solver.Sum([x[i, j] for i in range(n * n)]) == 1)

y = {}

for i in range(n * n):

for k in range(primepi):

y[i, k] = solver.Sum([x[i, j] * vals(j + 1, k) for j in range(n * n)])

rowsum = {}

for i in range(n):

for k in range(primepi):

rowsum[i, k] = solver.Sum([y[j, k] for j in range(n * i, n * i + n)])

colsum = {}

for i in range(n):

for k in range(primepi):

colsum[i, k] = solver.Sum([y[j, k] for j in range(i, n * n, n)])

for i in range(n):

for k in range(primepi):

solver.Add(rowsum[i, k] == colsum[i, k])

solver.Minimize(1)

status = solver.Solve()

# Print solution.

if status == pywraplp.Solver.OPTIMAL or status == pywraplp.Solver.FEASIBLE:

res = []

for i in range(n * n):

for j in range(n * n):

if x[i, j].solution_value() > 0:

res += [j + 1]

print(res)

else:

print("No solution found.")

if __name__ == "__main__":

main()Saturday, November 30, 2024

Five ways of solving an Expected Value problem

A Geometric Optimisation problem

Consider two circles $A(B)$ and $C(B)$ intersecting at $B$ and $D$, and a line passing through $D$. Let this line intersect the circle $A(B)$ at $F$ and the circle $C(B)$ at $G$.

The question of maximising the arithmetic mean of $DF$ and $DG$ is well known. It is also known that Euclidean construction of the line that maximises the Harmonic mean of $DF$ and $DG$ is impossible. We already saw the construction of the line that maximises the Geometric mean of $DF$ and $DG$ in this blog.

In this post we are interested in constructing the line such that the Quadratic mean of $DF$ and $DG$ is maximised. In fact, we solve a slightly more general problem. For a given $\theta$, we construct the line such that $\sqrt{DF^2+DG^2-2 \cdot DF \cdot DG \cdot \cos \theta}$ is maximised.

We first use some auxiliary points and lines that helps us in the final solution. For a given $\theta$, construct points $D'$ and $D'_1$ such that $\angle DAD'=\angle DCD'_1=\theta$. Construct the circle that passes through $D$, $B$ and $D'_1$.

For any point $E$ on this circle, let the line $ED'$ intersect circle $A(B)$ at $F$. Let $G$ be likewise on circle $C(B)$. Construct line $FG$. We first show that the line $FG$ passes through $D$.

We know that $\angle FD'B+\angle FDB=\pi$ because they are opposite angles in a cyclic quadrilateral. But $\angle FD'B=\angle ED'B=\pi-\angle ED'_1B$ because $\angle ED'B$ and $\angle ED'_1B$ are opposite angles of a cyclic quadrilateral. Now, $\angle FD'B=\angle BD'_1G=\angle BDG$.

Therefore, $\angle BDG + \angle FDB=\pi$ which shows that $F$, $D$ and $G$ are collinear.

Now, by construction, $\theta / 2=\angle D'BD=\angle D'_1BD$ and therefore, $\angle D'BD'_1=\theta$. This shows that $\angle D'ED'_1=\pi-\theta$.

We know that $\angle DGD'_1=\angle DBD'_1=\theta/2$ because they are angles in a same segment of a circle. Because $\angle DAD'=\theta$, we can see that $\angle DFD'=\pi-\theta/2$, and therefore $\angle DFE=\theta/2$.

Thus we have shown that $\triangle FEG$ is isosceles with $FE=EG$. Therefore, we can quickly see that $FG=2EF\cos(\theta/2)$.

Using Stewart's theorem on this triangle for the cevian DE, we have,

$EF^2=DE^2+DF\cdot DG$

Multiplying by $(2\cos(\theta/2))^2$ on both sides and simplifying, we get

$(2\cos(\theta/2))^2DE^2=FG^2-2DF\cdot DG \cdot 2\cos^2(\theta/2)$

Using the fact that $FG=DF+DG$ and $\cos\theta=2\cos^2(\theta/2)-1$,

$(2\cos(\theta/2))^2DE^2=DF^2+DG^2-2\cdot DF \cdot DG \cdot \cos\theta$

As $\theta$ is given, the above expression shows that maximising the RHS amounts to maximising $DE$. But this can be easily achieved. We just draw the line joining $D$ and center of circle $D'BD'_1$. The point at which this line intersects the circle gives us $E$ from which we can trivially construct the line $FG$ using what we've described before.

Hope you enjoyed this. See ya soon.

Until then, Yours Aye

Me

Tuesday, September 3, 2024

An Odd Sum

Someone in our puzzle group recently posted a question which asked to evaluate the following sum.

$$\frac{1}{e^{\pi}+1}+\frac{3}{e^{3\pi}+1}+\frac{5}{e^{5\pi}+1}+\cdots$$

My first instinct was to check the sum with Wolfram Alpha which showed me that this sum equals $1/24$. This made me curious.

I recast the sum into the following form.

$$\frac{e^{-\pi}}{1+e^{-\pi}}+\frac{3e^{-3\pi}}{1+e^{-3\pi}}+\frac{5e^{-5\pi}}{1+e^{5\pi}}+\cdots$$

The individual terms reminded me of Lambert series which eventually led me into rabbit hole that is to be the content of this post.

Let's define the following function $f(q)$ such that

$\displaystyle f(q)=\frac{q}{1+q}+\frac{3q^3}{1+q^3}+\frac{5q^5}{1+q^5}+\cdots$

Then,

$\displaystyle -f(-q)=\frac{q}{1-q}+\frac{3q^3}{1-q^3}+\frac{5q^5}{1-q^5}+\cdots$

Expanding using the lambert series for divisor functions, we see

$\displaystyle -f(-q)=\sigma_{\text{odd}}(1)q+\sigma_{\text{odd}}(2)q^2+\sigma_{\text{odd}}(3)q^3+\cdots=\sum_n \sigma_{\text{odd}}(n)q^n$

where $\sigma_{\text{odd}}(n)$ where is sum-of-odd-divisors function.

The sum-of-odd-divisors functions is intimately tied to the number of representations of an integer by a sum of four squares (Jacobi four square theorem) and by sum of four triangular numbers (Legendre). See Analogues on two classical theorems on representations of a number and The Parents of Jacobi’s Four Squares Theorem Are Unique, for example.

Therefore,

\displaystyle -f(-q) &= \sum_n \sigma_{\text{odd}}(n)q^n \\ &=\frac{1}{24} \left[8\sum_{n\text{ odd}}\sigma_{\text{odd}}(n)q^n + 24\sum_{n\text{ even}}\sigma_{\text{odd}}(n)q^n + 16\sum_{n\text{ odd}}\sigma_{\text{odd}}(n)q^n \right] \\ &= \frac{1}{24}\left(\sum_n r_4(n)q^n+\sum_n t_4(n) q^n \right) \\ &= \frac{\vartheta_2(q)^4+\vartheta_3(q)^4-1}{24}\end{align}$

$\displaystyle \vartheta_3(q)=\sum_{n=-\infty}^\infty q^{n^2}=\phi(q^2)\prod_{n \text{ odd}}(1+q^n)^2$ and

$\displaystyle \vartheta_2(q)=\sum_{n=-\infty}^\infty q^{(n+1/2)^2}=2\phi(q^2)q^{1/4}\prod_{n \text{ even}}(1+q^n)^2$

where $\displaystyle\phi(q)=\prod_{n}(1-q^n)$ is the Euler function.

Note that

$\displaystyle \prod_{n\text{ odd}}(1-q^n)=\frac{\phi(q)}{\phi(q^2)}$ and $\displaystyle \prod_{n}(1+q^n)=\frac{\phi(q^2)}{\phi(q)}$

Therefore,

$\displaystyle \vartheta_3(-q)=\phi(q^2)\frac{\phi(q)}{\phi(q^2)}\frac{\phi(q)}{\phi(q^2)}$ and $\displaystyle \vartheta_2(-q)=2\phi(q^2)(-q)^{1/4}\frac{\phi(q^4)}{\phi(q^2)}\frac{\phi(q^4)}{\phi(q^2)}$

Now,

$\displaystyle\sqrt\frac{\vartheta_2(-q)}{\vartheta_3(-q)}=\sqrt{2}(-q)^{1/8}\frac{\phi(q^4)}{\phi(q)}$

Putting $q=e^{-\pi}$ in the above expression, we have

$\displaystyle\sqrt\frac{\vartheta_2(-e^{-\pi})}{\vartheta_3(-e^{-\pi})}=\sqrt{2}(-1)^{1/8}e^{-\pi/8}\frac{\phi(e^{-4\pi})}{\phi(e^{-\pi})}$

Using the special values of the Euler function, we see that $\displaystyle \phi(e^{-4\pi})=\frac{e^{\pi/8}}{\sqrt{2}}\phi(e^{-\pi})$

Then,

$\displaystyle\sqrt\frac{\vartheta_2(-e^{-\pi})}{\vartheta_3(-e^{-\pi})}=(-1)^{1/8} \implies \vartheta_2(-e^{-\pi})^4+\vartheta_3(-e^{-\pi})^4=0$

Returning to our expression for $f(q)$, we see that $24f(e^{-\pi})=1-\vartheta_2(-e^{-\pi})^4-\vartheta_3(-e^{-\pi})^4=1$

As $f(e^{-\pi})$ is essentially the sum that we started with, we finally see that

$$\displaystyle\frac{1}{e^{\pi}+1}+\frac{3}{e^{3\pi}+1}+\frac{5}{e^{5\pi}+1}+\cdots=\frac{1}{24}$$

Phew!

As an aside, we could probably arrive the result a little quicker by using some results like

$\displaystyle\lambda(-1+i)=\frac{\vartheta_2(e^{(-1+i)\pi})^4}{\vartheta_3(e^{(-1+i)\pi})^4}=\frac{\vartheta_2(-e^{-\pi})^4}{\vartheta_3(-e^{-\pi})^4}=-1$ (or) $G_1=1\text{ and }g_1=2^{-1/8}$

where $\lambda(n)$ is the Modular lambda function and $g\text{ and }G$ are the Ramanujan g- and G- functions. But I didn't want to bring in additional exotic functions into the problem especially when I can't find a source for the above values of these functions.

Hope you enjoyed this. See ya soon.

Until then, Yours Aye

Me

.png)